Introduction: A Fork in the Road for More Retail’s Cloud Journey

Late last year, our More Retail engineering team found itself at a crossroads. We were running dozens of microservices on Amazon EKS (Elastic Kubernetes Service), the powerful Kubernetes-based platform, but things weren’t as smooth as we hoped. During one peak shopping weekend, our systems strained under load. Engineers were scrambling to tweak Kubernetes node pools and restart pods, instead of focusing on delivering new features. It became clear that this wasn’t just a technical hiccup – it was a business challenge. Every minute spent managing infrastructure was a minute lost on innovating for our customers. We needed to ask ourselves a tough question:

"Is EKS the right platform for our needs, or is there a simpler, more cost-effective path forward?"

That question sparked a journey. In this article (or should we say recipe, in true Cookbook style), we’ll share the narrative of how More Retail navigated from the complex waters of EKS to the calmer seas of Amazon ECS. This isn’t a dry technical migration story; it’s a tale of challenges faced, decisions made, and lessons learned – all with the goal of making our technology serve our business better.

Wrestling with EKS – The Struggles Were Real

When we first adopted Amazon EKS, we were excited to harness the power of Kubernetes. And EKS did serve us well for a time, allowing us to containerize applications and deploy at scale. But as More Retail grew, cracks began to show in our EKS experience. We’ve heard the saying “with great power comes great responsibility” – Kubernetes gave us power, but it demanded a lot of responsibility (and time) in return.

Operational Overhead: One of the immediate pain points was the operational burden. Running EKS meant managing our own cluster components – from scaling worker nodes to handling updates of the control plane. Yes, AWS takes care of the Kubernetes control plane uptime, but everything else – scaling, upgrades, plugin management – was on us. We needed specialized Kubernetes expertise on the team, and a significant chunk of our engineers’ time went into simply keeping the lights on for the cluster. In fact, Kubernetes’s complexity often meant dedicating team members full-time to cluster maintenance. (Anecdotally, one Reddit discussion noted that a 2,000-CPU EKS cluster required 2-3 full-time people to maintain; source: reddit.com.) While our setup wasn’t that massive, we felt the strain – every Kubernetes version upgrade or misbehaving pod could turn into an all-hands-on-deck situation.

Unexpected Issues: Despite our best efforts, we ran into mystifying errors and reliability problems. For example, at times our services would throw inexplicable 502 errors under load, or our logging pipeline from EKS would falter just when we needed those logs the most. When we checked whether the mistake was ours, we found that we weren’t alone in this kind of struggle – other companies, such as OOTI, had encountered frequent 502 timeouts, headaches with log processing, and complicated SSL setups while on EKS (doit.com). These issues weren’t just minor annoyances; they threatened the stability of applications running in production. In a retail context, especially grocery retail in India, an unstable app isn’t just a technical issue; it’s lost revenue and a lost customer. Our team often had to implement workarounds for Kubernetes ingress quirks or adjust cluster autoscaler settings during high-traffic events to prevent downtime. It felt like we were juggling knives – one slip and a critical service could go down.

Tooling and Integration Gaps: Running EKS meant we also had to deploy and maintain a suite of supporting tools. Need an ingress controller for routing traffic? We had to install and manage one (like Nginx or ALB Ingress Controller). Need monitoring? We spun up Prometheus and Grafana, and fretted over their scalability. Logging? We set up Fluentd/Fluent Bit to push logs to CloudWatch. Every integration with AWS services (like IAM roles, load balancers, or CloudWatch) required custom configuration to bridge the Kubernetes world with AWS’s ecosystem. Over time, it felt like we were rebuilding features that AWS-native services already offered, essentially duplicating effort. As our DevOps lead @Akshay quipped, “we were maintaining a Ferrari to deliver groceries – powerful, but maybe overkill for what we needed day-to-day.”

Costs Creeping Up: Then there was the cost factor – both human and machine. On the AWS bill, EKS itself adds a $0.10 per hour per cluster management fee. That’s $72 a month for a single cluster, which isn’t earth-shattering, but in our case we had multiple environments (dev, staging, prod clusters), and it added up over the year. More significantly, because we had to keep spare capacity in our Kubernetes nodes to handle traffic spikes (to avoid those dreaded pod unschedulable issues), we often paid for compute that sat idle most of the time. We tried to optimize with the cluster autoscaler, but scaling nodes up and down in EKS wasn’t as instantaneous as we wished. Compared to AWS’s native auto-scaling solutions, our Kubernetes setup appeared to be cost-inefficient at times. And let’s not forget the human cost: the hours our talented engineers spent babysitting the cluster could have been spent building new features for More’s customers. This is an “opportunity cost” that is hard to quantify, but we felt it daily.
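To make the control-plane arithmetic concrete, here is a minimal sketch. The $0.10 hourly fee and the 30-day month come from the figures above; the count of three clusters (dev, staging, prod) is an assumption for illustration, and the function name is ours.

```python
HOURLY_FEE_USD = 0.10        # EKS per-cluster management fee quoted above
HOURS_PER_MONTH = 24 * 30    # the 30-day month behind the $72 figure


def eks_control_plane_cost(clusters: int, months: int = 12) -> float:
    """Return the EKS management fee in USD for `clusters` clusters over `months` months."""
    return round(HOURLY_FEE_USD * HOURS_PER_MONTH * clusters * months, 2)


if __name__ == "__main__":
    print(eks_control_plane_cost(clusters=1, months=1))  # one cluster, one month
    print(eks_control_plane_cost(clusters=3))            # assumed three clusters, one year
```

This covers only the fixed fee; the idle node capacity described above was the larger and harder-to-quantify part of the bill.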

After a particularly rough week of fighting fires on EKS – including a late-night incident where a misconfigured Kubernetes DaemonSet took down a chunk of our capacity – our team sat down to reflect.

Were we doing the right thing for the business by sticking with this setup?

The answer was increasingly “no.” It was time to consider a change.

Choosing a New Path – Why We Bet on ECS

Realizing that our container orchestration was causing more pain than gain, we started exploring alternatives. The most obvious contender in AWS was Amazon ECS (Elastic Container Service). Admittedly, moving to ECS felt a bit like “going back to basics.” Kubernetes is the cool kid on the block with endless flexibility; ECS is often seen as the simpler, maybe less glamorous sibling. We had to check our assumptions and pride at the door. This decision wasn’t about using the trendiest tech – it was about finding the right tool for More Retail’s job.

We took a hard look at what AWS ECS could offer and how it stacked up against EKS for our needs:

- Simplicity & Lower Ops Overhead: The biggest draw of ECS was its promise to take over much of the orchestration heavy lifting. With ECS, AWS handles the cluster management. There’s no separate control plane for us to maintain (and no control plane fee either). We wouldn’t need to worry about upgrading master nodes or compatibility between Kubernetes versions and our application pods. Essentially, ECS offered to free us from the “cluster caretaker” role. For our team, that meant we could potentially save those on-call nights and refocus our energy on development. We saw validation of this in the industry too – AWS case studies like Vanguard have shown that using managed services (like ECS) removes the need for IT teams to manage underlying servers, giving developers more time to innovate (Vanguard even cut their deployment time drastically, from 3 months to 24 hours, by removing undifferentiated heavy lifting). That was a compelling story: more time for our developers equals faster delivery of features to the business.

- Deep AWS Integration (The “AWS-native” Advantage): One of ECS’s strengths is that it’s built into the AWS ecosystem from the ground up. We realized we were already all-in on AWS for networking, load balancing, security, and more – so why not use a service that speaks AWS natively? For example, in ECS each task can easily assume an IAM role without extra plugins (no more fiddling with Kubernetes IAM bindings and service accounts). CloudWatch logs and metrics for ECS tasks are a few clicks away, whereas with EKS we had to pipe everything in. And using AWS Application Load Balancers with ECS is straightforward, as ECS can manage the target groups for you. This tight integration was very appealing. We came across a guide that put it well: ECS integrates tightly with other AWS services like Fargate (for serverless containers), IAM (fine-grained access control), and CloudWatch (monitoring and logging), making it easier to build a cohesive AWS-centric infrastructure. In short, ECS promised to play nicely with all of AWS’s managed services – a bit like adding an ingredient that binds all the flavors together in a recipe.

- Cost Considerations: Cost was a big factor in our decision, and we looked at it from multiple angles. First, there’s the direct cost of services. As mentioned, EKS has an extra hourly charge per cluster, whereas ECS has no additional cost for the control plane – you only pay for the underlying EC2 instances or Fargate runtime. Eliminating that fee was one small win. More importantly, we believed ECS would let us use resources more efficiently. We were particularly intrigued by running ECS with AWS Fargate, which is a serverless compute engine for containers. With Fargate, we’d no longer have to run EC2 instances 24/7 for our cluster; instead, AWS would provision just the right amount of CPU/RAM for each container task. No more, no less. This granular allocation could improve our resource utilization. It aligned with what we learned externally too: ECS can be more cost-effective than EKS, especially when combined with AWS Fargate, since Fargate eliminates the need to provision and manage EC2 instances, potentially reducing operational costs and waste. Additionally, ECS would make it easier to use Spot Instances for our containers (something that’s possible on EKS but simpler to manage in ECS). In fact, the team read about OOTI’s migration where after moving to ECS, they leveraged short-lived tasks and Fargate Spot to achieve significant cost savings through optimized resource use. This was music to our CTPO's ears – and to ours as well!

- Sufficient Scalability & Features: We were careful not to trade away critical capabilities. Kubernetes is known for its powerful scaling and flexibility. Would ECS keep up with our growth and needs? We were happy to learn that ECS is extremely scalable (it runs thousands of tasks at Amazon and other companies), and it supports advanced features like autoscaling, rolling deployments, and load balancing out-of-the-box. For example, ECS can orchestrate deployments across multiple Availability Zones and keep services healthy via built-in integration with health checks. Our team’s experiments and reading gave us confidence that ECS could handle More Retail’s traffic patterns. We even found that some features that were add-ons in Kubernetes (like scheduled tasks or batch jobs) could be achieved in ECS using native AWS services (like Amazon EventBridge for cron schedules, or AWS Batch integration). It was a different approach – somewhat less “one platform does it all” than Kubernetes – but it fit well with our philosophy of using the right service for the right job. We realized we didn’t need the vast ecosystem of Kubernetes plugins; we just needed a stable, efficient way to run our containers at scale.

- Team Fit and Learning Curve: Another consideration was our team’s familiarity and the learning curve. Adopting ECS would mean reorienting how we define and deploy services (no more kubectl, hello new concepts like Task Definitions and ECS Services). But compared to the steep learning curve we’d climbed with Kubernetes, ECS felt refreshingly straightforward. In many ways, ECS abstracts away the complexity. As one blog aimed at startups put it: if your team is new to containers or wants less complexity, ECS offers a quicker path with a gentler learning curve. We figured our developers would spend less time dealing with YAML files and Kubernetes object intricacies, and more time with high-level definitions that mirror our application architecture. This was largely true in practice – after a bit of initial training, many developers found deploying to ECS simpler than to EKS.

With these points in mind, the decision was becoming clear. Our rationale for choosing ECS centered on a promise of lower management effort, tighter AWS integration, and better cost efficiency – all of which ultimately serve the business by improving reliability and freeing up our people to focus on business-value tasks. It wasn’t a decision made lightly; we held several architecture reviews, consulted some AWS solution architects, and even spoke with another company that had made a similar switch. In the end, the evidence was compelling that ECS could solve many of the pain points we had with EKS. The potential benefits for More Retail – in cost savings and in faster innovation – were too great to ignore.

Finally, with excitement (and a healthy dose of nerves), we decided to embark on the migration. We had our destination. Now we needed a plan to get there.

The Migration Journey – From Plan to Execution

Having decided that “ECS it is!”, we knew we had to execute the migration carefully. This wasn’t just flipping a switch. We’re talking about moving the underlying platform for dozens of live applications that More Retail relies on daily. Our goals were to make the transition incremental, with minimal disruption, and to bring everyone along the learning journey. Here’s how we orchestrated our move from EKS to ECS, step by step:

1. Laying the Groundwork – Planning and Preparation: We kicked off with a thorough audit of our existing EKS workloads. We catalogued each microservice, noting its resource requirements, any special configuration (like ConfigMaps, volumes, secrets), and how it interfaced with other services. This inventory was crucial – it was like writing down all the ingredients before cooking a complex dish. We also identified Kubernetes-specific components we’d have to replace. For instance, we used Kubernetes CronJobs for scheduled tasks and Custom Resource Definitions (CRDs) for a couple of operators. We planned alternatives for those (such as using AWS EventBridge Scheduler for cron jobs, and in one case, redesigning a workflow to remove the need for a custom operator).

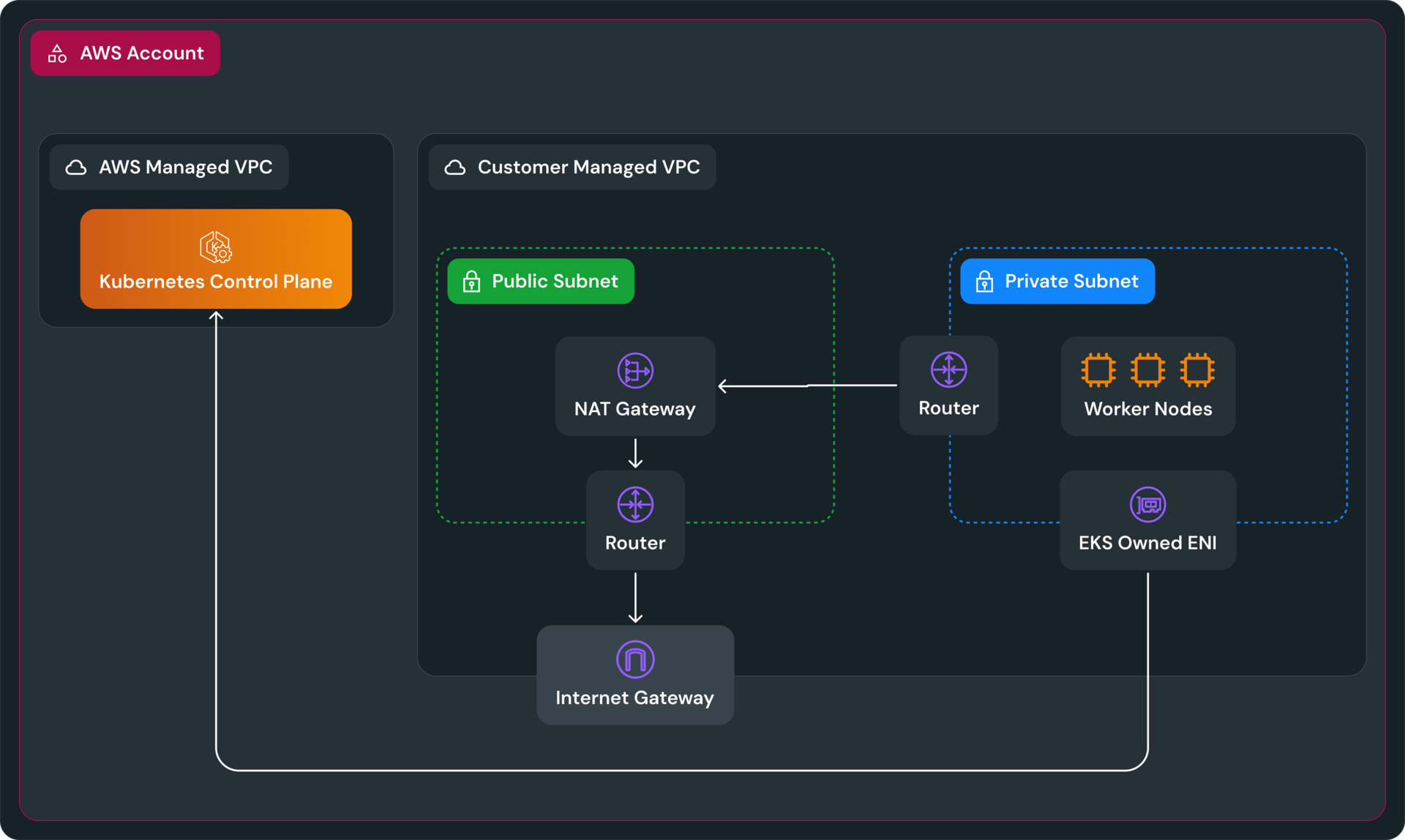
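As an illustration of the CronJob replacement mentioned above, the sketch below converts a five-field Kubernetes CronJob schedule into the six-field `cron(...)` expression that EventBridge uses. It is not the tool we ran; the function names are hypothetical. It relies on two differences we are confident of: EventBridge requires `?` in either day-of-month or day-of-week, and it numbers days 1-7 starting from Sunday rather than 0-6. The minute, hour, day-of-month, and month fields are passed through unchanged, so step syntax in those fields should be checked against the EventBridge documentation.

```python
def k8s_cron_to_eventbridge(schedule: str) -> str:
    """Convert a 5-field Kubernetes CronJob schedule to an EventBridge cron() expression."""
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 cron fields, got {len(fields)}: {schedule!r}")
    minute, hour, dom, month, dow = fields

    if dow == "*":
        dow = "?"  # EventBridge needs '?' in one of the two day fields
    elif dom == "*":
        dom = "?"
        dow = ",".join(_shift_dow(part) for part in dow.split(","))
    else:
        # Standard cron ORs the two day fields; EventBridge cannot express that.
        raise ValueError("day-of-month and day-of-week cannot both be restricted")

    return f"cron({minute} {hour} {dom} {month} {dow} *)"  # trailing '*' is the year


def _shift_dow(part: str) -> str:
    """Map 0-6 (Sunday=0) to EventBridge's 1-7 (Sunday=1); named days pass through."""
    if "/" in part:
        raise ValueError(f"step values in day-of-week are not handled: {part!r}")
    if not any(ch.isdigit() for ch in part):
        return part.upper()  # e.g. mon-fri -> MON-FRI
    if "-" in part:
        lo, hi = (int(x) for x in part.split("-"))
        if not 0 <= lo <= hi <= 6:
            raise ValueError(f"unsupported day-of-week range: {part!r}")
        return f"{lo + 1}-{hi + 1}"
    day = int(part)
    if not 0 <= day <= 7:
        raise ValueError(f"invalid day-of-week: {part!r}")
    return str(day % 7 + 1)  # 7 is also Sunday in many cron dialects


if __name__ == "__main__":
    for expr in ("0 2 * * *", "30 9 * * 1-5"):
        print(expr, "->", k8s_cron_to_eventbridge(expr))
```

Schedules that restrict both day fields are rejected rather than guessed at, because splitting them into two EventBridge schedules is a design decision best made per job.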

At this stage, we also set up the basic ECS infrastructure in parallel to our running EKS clusters. AWS makes it easy to get started: we created a new ECS cluster (backed by Fargate so we wouldn’t need to manage EC2 instances at all) and configured the networking (VPC, subnets, security groups) similarly to our EKS cluster’s setup. Think of this as preparing a new kitchen while the restaurant (our production) is still open – we had to ensure both old and new environments could run side by side for a while.

Team Enablement: We didn’t want our engineers flying blind into ECS, so we did quick internal workshops on ECS concepts. We showed how an ECS Task Definition is analogous to a Pod spec, how an ECS Service is like a Deployment, and how service discovery, config, and secrets work in ECS (leveraging AWS Cloud Map and AWS Secrets Manager, for example). The team’s initial apprehension gave way to curiosity as they saw that most concepts carried over, and some things were actually simpler (for example, connecting an ECS service to an ALB was easier than managing Kubernetes ingress rules). This preparation phase set the stage, aligning everyone on the plan and the tools we’d use.
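The Pod-spec-to-Task-Definition analogy from the workshops can be sketched in code. The function below takes a small subset of a Pod manifest and produces parameters in the shape of the ECS RegisterTaskDefinition API for Fargate. The function name, the example service, the image URL, and the choice to size the task through explicit `cpu` and `memory` arguments are all assumptions for illustration; a real conversion would also have to handle volumes, probes, and secrets.

```python
from typing import Any


def pod_to_task_definition(
    pod: dict[str, Any],
    *,
    cpu: str,
    memory: str,
    execution_role_arn: str,
    log_group: str,
    region: str,
) -> dict[str, Any]:
    """Build RegisterTaskDefinition-style parameters from a Pod manifest subset."""
    family = pod["metadata"]["name"]
    containers = []
    for c in pod["spec"]["containers"]:
        containers.append({
            "name": c["name"],
            "image": c["image"],
            "essential": True,
            "portMappings": [
                {"containerPort": p["containerPort"],
                 "protocol": p.get("protocol", "TCP").lower()}
                for p in c.get("ports", [])
            ],
            # Only literal env values are copied; valueFrom entries (Secrets,
            # ConfigMaps) need a separate mapping, e.g. to Secrets Manager.
            "environment": [
                {"name": e["name"], "value": e["value"]}
                for e in c.get("env", [])
                if "value" in e
            ],
            "logConfiguration": {
                "logDriver": "awslogs",
                "options": {
                    "awslogs-group": log_group,
                    "awslogs-region": region,
                    "awslogs-stream-prefix": family,
                },
            },
        })
    return {
        "family": family,
        "networkMode": "awsvpc",               # required for Fargate tasks
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,                            # task-level CPU units, e.g. "256"
        "memory": memory,                      # task-level memory in MiB, e.g. "512"
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": containers,
    }


# Hypothetical manifest used only to exercise the sketch.
EXAMPLE_POD = {
    "metadata": {"name": "inventory-api"},
    "spec": {"containers": [{
        "name": "app",
        "image": "example.invalid/inventory-api:1.0",
        "ports": [{"containerPort": 8080}],
        "env": [{"name": "LOG_LEVEL", "value": "info"}],
    }]},
}
```

The resulting dictionary could be passed as keyword arguments to an ECS client's `register_task_definition` call; an ECS Service then plays the role the Deployment played, keeping the desired number of copies of that task running.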

2. Building & Testing in Parallel: With the groundwork laid, we proceeded to deploy a few services on ECS in a test environment. We started with some non-critical internal services – this was our equivalent of a pilot run. We took their container images (the beauty of containers is that our actual app code didn’t need changes) and defined ECS Task Definitions for each. We configured these services behind a new Application Load Balancer, set up CloudWatch logging, and made sure all IAM permissions were in place for the tasks. It took some tweaking to get the first services running – we bumped into IAM permission errors initially (forgot to allow our ECS tasks to access certain AWS resources) and had to adjust some Docker health check settings for the ECS environment. But within a few days, we had a handful of services running in ECS exactly as they were in EKS.

We then ran comparative tests. We replayed some production traffic (in a controlled way) to those ECS-based services and monitored performance. To our relief, performance on ECS was on par or better. One reason for better performance was that in ECS/Fargate, each task got its dedicated slice of CPU and memory, whereas in Kubernetes our pods were competing on shared nodes, occasionally causing noisy-neighbor issues. The isolation ECS provided gave us consistent performance results. This testing phase was crucial: it built confidence among the team and stakeholders that “Yes, ECS can handle it.”

We also made sure to test failure scenarios – killing containers, failing health checks – to see how ECS auto-recovery works. It did what it should: if a container died, ECS spun a new one without any custom scripts on our part. This level of resilience out-of-the-box had some of our engineers almost giddy (for once, we weren’t writing custom handlers for these situations!).

3. Gradual Service-by-Service Migration: Satisfied with our dry run, we moved on to the meat of the migration: shifting all production services from EKS to ECS, one at a time. We deliberately chose a gradual migration strategy. Rather than cut everything over in one big bang (which would be risky and stressful), we treated each service migration as a mini-project. We’d pick a service, ensure an ECS version of it was ready, and then switch traffic to the ECS service.

To minimize risk, we used a blue-green deployment approach for each service. Concretely, for a given microservice, we would: deploy it on ECS (the “green” version) while it was still running on EKS (the “blue” version), then update the DNS or load balancer to point to the ECS task. At this moment, the service is live on ECS. We’d closely monitor metrics – error rates, latency, CPU/memory – now coming from ECS/CloudWatch. If anything looked off, we could quickly roll back traffic to the EKS version (which we kept running in standby until we were confident). In most cases, the switch was seamless and invisible to end-users. After a successful switch and a stabilizing period, we decommissioned the service on EKS to free up resources. Then it was on to the next one.
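The switch-monitor-rollback loop described above can be sketched as follows. This is an illustration rather than our actual tooling: `point_traffic_to` and `error_rate` are hypothetical callables standing in for whatever updates the DNS record or load balancer and whatever reads the error-rate metric, and the 1% threshold and five checks are assumed values.

```python
from typing import Callable


def cutover(
    point_traffic_to: Callable[[str], None],
    error_rate: Callable[[], float],
    *,
    max_error_rate: float = 0.01,
    checks: int = 5,
    pause: Callable[[], None] = lambda: None,
) -> str:
    """Send traffic to ECS, watch it, and fall back to EKS on regression.

    Returns the platform left serving traffic: "ecs" or "eks".
    """
    point_traffic_to("ecs")
    for _ in range(checks):
        pause()  # e.g. lambda: time.sleep(60) between metric reads
        if error_rate() > max_error_rate:
            # The EKS copy is still running in standby, so rollback is a re-point.
            point_traffic_to("eks")
            return "eks"
    return "ecs"
```

Keeping the two side effects behind injected callables makes the decision logic easy to rehearse in a test before it is trusted with production traffic.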

This process taught us a lot. For instance, one of our critical services had a dependency on shared storage (EFS volume) that was mounted in Kubernetes. We had to configure ECS to mount the same EFS volume. It took a bit of digging into ECS task definitions, but AWS had clear documentation and we got it working. Another example: some of our apps relied on Kubernetes ConfigMaps for configuration. In ECS, the equivalent was to use either environment variables (for simpler configs) or mount configuration files from S3 or Secrets Manager. We decided to build a small tool to sync our config data to S3 and have the ECS tasks pull it on start. It wasn’t too difficult, and in hindsight, it made our config management more streamlined and secure (we ended up leveraging AWS Secrets Manager more, which was a bonus).
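The "pull config on start" half of that approach might look like the sketch below. It assumes the config is stored as a JSON object in S3 and that the client exposes `get_object` the way a boto3 S3 client does; the bucket name, key, and `FakeS3` stand-in are hypothetical and exist only so the example runs without AWS access.

```python
import io
import json
from typing import Any, Optional


def load_config(
    s3_client: Any,
    bucket: str,
    key: str,
    defaults: Optional[dict[str, Any]] = None,
) -> dict[str, Any]:
    """Fetch a JSON config object from S3 and layer it over local defaults."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    remote = json.loads(response["Body"].read())
    return {**(defaults or {}), **remote}  # remote values win over defaults


class FakeS3:
    """Stand-in for an S3 client so the sketch runs without AWS credentials."""

    def __init__(self, objects: dict[tuple[str, str], bytes]):
        self._objects = objects

    def get_object(self, Bucket: str, Key: str) -> dict[str, Any]:
        return {"Body": io.BytesIO(self._objects[(Bucket, Key)])}


if __name__ == "__main__":
    fake = FakeS3({("example-config-bucket", "inventory-api/config.json"):
                   b'{"cache_ttl_seconds": 30}'})
    print(load_config(fake, "example-config-bucket", "inventory-api/config.json",
                      defaults={"cache_ttl_seconds": 60, "log_level": "info"}))
```

In a real task, the client would come from `boto3.client("s3")` and the task's IAM role would need read access to that one key prefix. Sensitive values belong in Secrets Manager rather than in this file.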

Throughout this migration marathon, communication was key. We kept all stakeholders (from developers to QA to product managers) informed about which services were moving when. This wasn’t just an “ops project”; it was a company project. By involving everyone, we also got valuable feedback. Developers, for example, started adjusting their CI/CD pipelines early to deploy to ECS. Our pipeline changes involved replacing kubectl apply steps with ECS deployment steps (using AWS Copilot CLI or AWS CLI commands for ECS). With each service migrated, the process became smoother for the next, as we reused the patterns and scripts we’d created. It truly felt like we were gaining momentum and confidence as we went along.
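For the pipeline change, a minimal sketch of what replaces a `kubectl apply` step when using the AWS CLI route: one `aws ecs update-service` call to roll out a new task definition revision, followed by `aws ecs wait services-stable` to block until the rollout settles. The helper name and the example cluster, service, and revision values are hypothetical; our real pipelines carried more steps, such as registering the task definition first.

```python
def ecs_deploy_commands(cluster: str, service: str, task_definition: str) -> list[list[str]]:
    """Return the AWS CLI invocations for one rolling deployment, in order."""
    return [
        # Point the service at the new task definition revision.
        ["aws", "ecs", "update-service",
         "--cluster", cluster,
         "--service", service,
         "--task-definition", task_definition],
        # Block until the deployment has settled (or the waiter times out).
        ["aws", "ecs", "wait", "services-stable",
         "--cluster", cluster,
         "--services", service],
    ]


if __name__ == "__main__":
    for argv in ecs_deploy_commands("prod", "inventory-api", "inventory-api:42"):
        print(" ".join(argv))
```

A CI job could run each list with `subprocess.run(argv, check=True)`, so that a failed update or an unstable service fails the pipeline.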

4. Facing Challenges Head-On: No migration is without surprises. We hit a few bumps and learned some lessons:

- Stateful Workloads: Most of our services are stateless, which is ideal for container orchestration. But we had a couple of stateful components (like a service that did in-memory caching for fast responses). On EKS, it wasn’t truly persistent, but the way it was architected assumed a pod might stick around for a while. In ECS with Fargate, containers are truly ephemeral. We refactored this service to use an external Redis cache, decoupling it from any single container’s lifespan. This added a bit of refactoring work, but it improved the app’s design overall.

- Monitoring and Debugging Mindset: We had grown accustomed to certain Kubernetes-centric debugging tools (like kubectl exec into pods). With ECS, the approach is different – you rely more on logs, AWS X-Ray tracing, and metrics to know what’s going on inside a container. We enabled AWS CloudWatch Logs for all tasks and set up central dashboards. We also used AWS CloudTrail and ECS event streams to see any failures in scheduling tasks. The team adapted to these new tools pretty quickly, especially after we held an “ECS monitoring 101” session. Now, our observability is arguably better than it was before – we even set up CloudWatch alarms to notify us if any ECS service’s desired vs running task count mismatched (indicating a task crash).
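The desired-versus-running check behind those alarms can be illustrated with a small polling sketch. This is not the CloudWatch alarm itself: it is a plain function over a response shaped like the output of the ECS DescribeServices API, which reports `desiredCount` and `runningCount` per service. The function name and sample services are hypothetical.

```python
from typing import Any


def services_missing_tasks(response: dict[str, Any]) -> list[str]:
    """List services whose running task count is below the desired count."""
    problems = []
    for svc in response.get("services", []):
        desired, running = svc["desiredCount"], svc["runningCount"]
        if running < desired:
            problems.append(f"{svc['serviceName']}: {running}/{desired} tasks running")
    return problems


# Sample data in the DescribeServices response shape (values are made up).
SAMPLE_RESPONSE = {"services": [
    {"serviceName": "cart", "desiredCount": 4, "runningCount": 4},
    {"serviceName": "search", "desiredCount": 3, "runningCount": 1},
]}

if __name__ == "__main__":
    for line in services_missing_tasks(SAMPLE_RESPONSE):
        print(line)
```

Counts can differ briefly during a normal rolling deployment, so any alerting built on this should require the mismatch to persist for a few minutes before paging anyone.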

Each challenge we encountered was met with collaboration and a can-do spirit from the team. In our Slack channels, screenshots of successful ECS deployments started piling up, usually accompanied by celebratory emojis. Slowly but surely, service by service, we saw our Kubernetes dashboard quiet down and our ECS console light up with healthy green status checks.

5. The Cutover and Cleanup: After weeks of diligent effort, we reached the final stretch – the last few services were migrated. It was a satisfying moment when we shut down our EKS cluster for the last time. One of our engineers couldn’t resist posting a light-hearted eulogy for our Kubernetes cluster in the team chat. While Kubernetes had served us, none of us were going to miss the pager duty alerts it sometimes caused. With EKS gone, we tore down the now-unused infrastructure (goodbye to those EKS worker nodes and their costs). We transitioned fully into maintenance mode on ECS. This meant updating our runbooks, our documentation, and architecture diagrams to reflect the new reality. It was important to cement this change for operational continuity – future hires won’t need to know the old way, only the new.

When all was said and done, the migration was complete. We managed to pull it off with zero downtime for customers, and only minimal hiccups that we addressed on the fly. It wasn’t magic – it was a combination of careful planning, team expertise, late-night efforts, and yes, the capabilities of AWS that made it possible.

New Horizons – Life After Migration & Key Takeaways

Standing on the other side of this migration, we can confidently say the journey was worth it. The differences in our day-to-day operations and the benefits to More Retail as a business have been significant. Here’s what changed for the better after moving to ECS:

- Freedom from Cluster Maintenance: The relief among our ops team was tangible. Tasks like patching the cluster, tuning the autoscaler, or worrying about etcd backups simply vanished. AWS now handles the control plane and scheduling logic. As a result, our team’s on-call load has dropped. It’s not just anecdotal – we looked at our incident logs and found a sharp reduction in infrastructure-related incidents. Previously, if a node went unhealthy or a Kubernetes daemon crashed, it would trigger alerts. Now, AWS ECS and Fargate abstract that away; if a VM underlying our Fargate tasks has an issue, AWS moves our tasks elsewhere without us even knowing.

- Cost Wins (Infrastructure and Beyond): Now that a couple of months have passed, we’ve seen concrete cost benefits. Our AWS bills for container infrastructure are lower by roughly 20% compared to the EKS era. Several factors contributed to this: we no longer run extra idle capacity “just in case” – with ECS on Fargate, we pay for exactly what we use when we use it. We also made heavy use of Fargate Spot for non-critical and batch workloads, which saved us a lot (Fargate Spot can be up to 70% cheaper than regular Fargate pricing). While cost savings were great, the more important aspect is cost visibility and control. Now each service’s cost is more isolated (since each runs as a separate task or set of tasks). We can attribute costs more clearly and optimize per service. Moreover, by offloading Kubernetes management, we avoided needing to hire additional specialists. We feel that effect – our existing team is enough to manage the new setup, and their time is now spent on higher-value activities than babysitting infrastructure.

- Performance and Reliability Boost: Since moving to ECS, our applications have been remarkably stable. Remember those random 502 errors we struggled with? They’re essentially gone. We credit a few things for this. ECS with Fargate gives us an isolated environment per task, so no more noisy neighbors or port conflicts as sometimes happened on Kubernetes. Also, AWS’s handling of networking in ECS seems very robust – we leaned into using Amazon’s ALB for each service’s ingress, and it’s rock solid (we no longer worry about tuning Nginx ingress controllers). Scaling events that would have been dramatic in Kubernetes (bringing up new nodes could take several minutes) are now handled gracefully by ECS. If a spike comes, ECS spins up new tasks within seconds. We had a real-world test of this during a flash sale in March: traffic jumped 3x in a few minutes for a particular service. In the past, our Kubernetes cluster would have struggled to scale fast enough, and we might see degraded performance until pods caught up. This time, ECS added tasks so quickly that our dashboards barely registered any rise in latency. It was a night-and-day difference. The business folks may not notice these technical details, but they do notice that our systems are handling peak loads without a hitch, which translates to smooth customer experiences.

- Developer Velocity and Morale: One perhaps under-appreciated benefit is the impact on our development culture. Developers now deploy services without having to grasp Kubernetes internals. The deployment process has been simplified (our CI/CD pipelines handle the ECS deployments with less convoluted logic than before). New services are easier to provision – it’s basically define a task and go, no need to think about cluster capacity or YAML conflicts. As a result, we’ve seen feature development speed up. For example, our team delivered a new inventory tracking microservice in record time last month.

- Security and Compliance: Initially, one of the reasons we chose Kubernetes was the control it gave us, which we assumed would help in security (namespace isolation, network policies, etc.). With ECS, we worried we’d have less control. In practice, AWS’s integration actually improved some security aspects. For instance, we use IAM Task Roles to ensure each task has the minimum permissions it needs – no more overly broad roles applied at the node level. Our network architecture is simpler now, which means fewer misconfiguration risks. All tasks run in our private subnets by default and only go out through controlled endpoints. We’ve leveraged security groups per service, which is conceptually simpler than Kubernetes NetworkPolicies. In short, we have confidence that our security posture is solid (if not better) after the migration. And compliance audits have become easier since we can point auditors to AWS’s compliance docs for ECS and show simpler network diagrams.
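To show what "minimum permissions per task" can look like, here is a sketch that builds an IAM policy document for a single task role, plus the trust policy that lets ECS tasks assume it. The `ecs-tasks.amazonaws.com` principal and the two actions are standard IAM identifiers; the bucket, prefix, and secret ARN are hypothetical, and the helper is an illustration rather than the policy generator we use.

```python
from typing import Any

# Trust policy allowing ECS tasks to assume the role.
TASK_TRUST_POLICY: dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ecs-tasks.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}


def task_role_policy(bucket: str, prefix: str, secret_arns: list[str]) -> dict[str, Any]:
    """Build a policy that can read one S3 prefix and a named set of secrets."""
    statements: list[dict[str, Any]] = [{
        "Effect": "Allow",
        "Action": ["s3:GetObject"],
        "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
    }]
    if secret_arns:  # an empty Resource list would be invalid, so skip the statement
        statements.append({
            "Effect": "Allow",
            "Action": ["secretsmanager:GetSecretValue"],
            "Resource": list(secret_arns),
        })
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    import json
    print(json.dumps(task_role_policy(
        "example-config-bucket", "inventory-api/",
        ["arn:aws:secretsmanager:ap-south-1:123456789012:secret:inventory-api/db-EXAMPLE"],
    ), indent=2))
```

Because the role is attached to one task definition, a compromised container can reach only that service's config prefix and secrets, not everything a shared node role would have allowed.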

Conclusion: Scaling New Heights with a Simplified Stack

In the grand scheme of More Retail’s journey, migrating from EKS to ECS was a pivotal chapter. We started with a pressing need to improve stability and control costs – a business challenge that demanded a technical solution. By embracing AWS ECS, we found that solution, and along the way, we transformed how we operate. Our story illustrates that innovation isn’t always about adding more complexity or chasing the newest tools; sometimes it’s about removing complexity to let innovation flourish.

Today, More Retail’s infrastructure is leaner, more resilient, and primed for growth. We’ve reduced our cloud spending and operational overhead. We’ve gained confidence that when the next big sale hits or our user traffic spikes, our platform can scale effortlessly to meet demand. Perhaps most importantly, our team is now free to focus on what really matters – building features that improve the shopping experience and optimizing our systems in ways that directly benefit the business.

This migration has also set us up for the future. With ECS as our foundation, we can explore other AWS innovations more easily. For instance, integrating serverless functions (AWS Lambda) or AWS Step Functions for certain workflows is more straightforward now that we’re firmly in the AWS ecosystem. If we ever need hybrid cloud or on-prem containers, AWS ECS Anywhere could extend our current setup without introducing a new orchestration layer. In essence, we’ve aligned our technology with the direction of AWS, and that gives us a strong roadmap for the future.

To wrap up, our move from EKS to ECS was about making a choice: a choice to prioritize simplicity, cost-effectiveness, and deep integration with our platform provider. It wasn’t a decision against Kubernetes per se – it was a decision for what made the most sense for More Retail’s success. And judging by the results we’re seeing, it’s a decision we’re very happy with.

Just as a well-crafted recipe brings together the right ingredients for a delightful dish, this migration brought together the right technologies and teamwork to serve up success. More Retail is now more agile, efficient, and ready to scale than ever. In the ever-evolving journey of our tech stack, moving to ECS turned out to be that special ingredient that elevated the whole dish. Bon appétit to that future!

Key Takeaways from Our EKS to ECS Migration:

- Focus on business needs: We reframed the tech migration as a solution to business problems (cost, reliability, speed), which helped guide decisions.

- Iterative migration reduces risk: Moving one service at a time with fallbacks prevented big bang failures and built confidence progressively.

- Leverage managed services strengths: By using ECS and Fargate, we let AWS handle undifferentiated heavy lifting – benefiting from their scalability and reliability out-of-the-box.

- Cost and efficiency gains are real: We cut infrastructure waste and saw significant savings.

- Empower the team: Involve and educate the team early. Our engineers’ skills grew through this process, and now they’re comfortable with a new toolset.

- Simpler can be better: At the end of the day, simplicity won. Our platform is easier to understand and manage, which is a strategic advantage as we onboard new team members and tackle new challenges.

This journey has reinforced a core principle for us: the best technology choices are those that let us focus on delivering value, not those that consume our focus to maintain. With AWS ECS, More Retail is focused, fortified, and future-ready. Here’s to scaling new heights on a stable foundation!

Chalapathy V

SDE 2 @ More Retail